Why Email A/B Testing Should Be Your First Step as a Marketer

When you step into the world of conversion rate optimization (CRO), you are inundated with terminology: “Landing Page Optimization”, “A/A”, “A/B/N”, “Above The Fold”, “Below The Fold”, and so on!

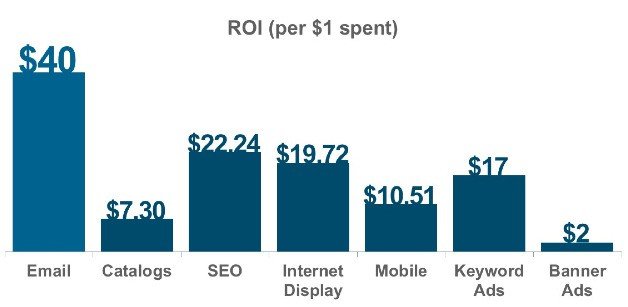

Yet we seldom think of A/B testing our email marketing campaigns, even though, year after year, email delivers the highest ROI of any acquisition channel. It makes sense, then, to look for ways to improve your email marketing efforts and earn an even higher ROI.

In this blog post, we will discuss how you can do so by incorporating A/B testing best practices, methodologies, and mental models.


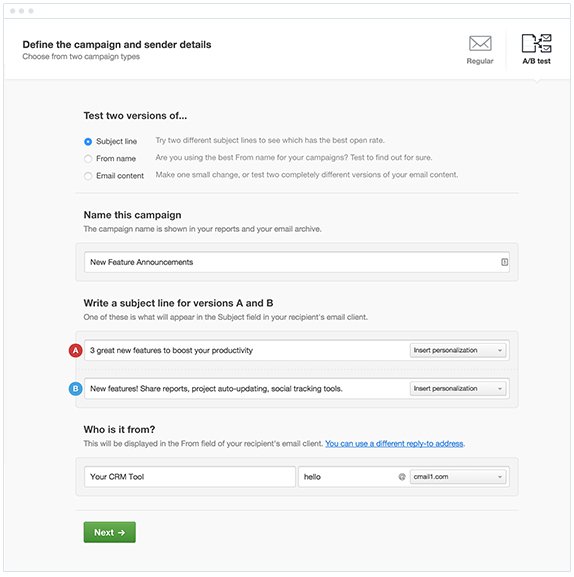

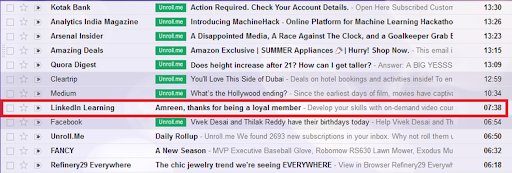

Image Source: Capterra

What is email marketing?

Email marketing is a direct form of marketing that uses email to promote a business. It is a form of relationship marketing that most brands use to engage directly with potential customers and keep them informed and up to date.

Why email marketing?

According to a McKinsey report, email is 40 times more effective than social media at acquiring customers. Prospects tend to take a brand more seriously over email than when they engage with it on social media.

Email marketing is holding strong even in a world driven by social media. A study by ExactTarget found that 91% of people use their smartphones to check email, compared with 75% who use them for social media.

If your email marketing strategy ignores this audience, you miss out on a channel that is used extensively around the world.

Compared with other forms of marketing, email is a highly personalized way to reach and engage your target customers. It is cost-effective, and its ROI is higher than that of other forms of marketing, such as display ads and traditional channels.

Email marketing lets businesses reach targeted audiences at almost no cost per customer, and it also makes metrics easy to track. The metrics typically used to judge an email campaign are open and click-through rates. To maximize your open, click, and response rates, you need to design your campaigns carefully.

You can do this by testing your campaigns to find out what works best for you.

Why should you A/B test emails?

A/B testing is a great tool for improving your email marketing performance, but only if you know what you’re doing.

Experimenting with your email campaigns lets you compare variations of individual elements and gauge each one’s impact on how your subscribers respond.

An A/B test starts with a hypothesis, followed by a test designed to confirm or refute it.

With A/B testing, you can:

Increase the open and click-through rates:

A/B testing helps you identify common factors and trends that increase open and click-through rates. For example, you may find that mobile-friendly emails, relevant information shown in images, and a responsive design that adapts to all screen sizes perform better.

Increase conversion rate and generate revenue:

Higher click-through rates mean more subscribers are likely reaching your website and acting on your call-to-action (CTA) buttons, for example by filling out forms. You can also track how many opened emails led to sales or leads.

Get clarity about your target group’s expectations:

Your communication should be relevant to your target group’s interests. You need to know your audience well to understand their behavior and, therefore, their expectations. Craft personalized emails by addressing recipients by name.

You can go further by creating targeted content for each segment of your list and addressing its pain points with a solution delivered privately to their inbox.

Save time and money:

A lot of money, energy, and time goes into creating landing pages and forms and into sending emails to a broad audience. A/B testing helps you find the right recipe for a successful campaign through incremental changes, each validated by statistically significant test results.


What elements should you A/B test in your emails?

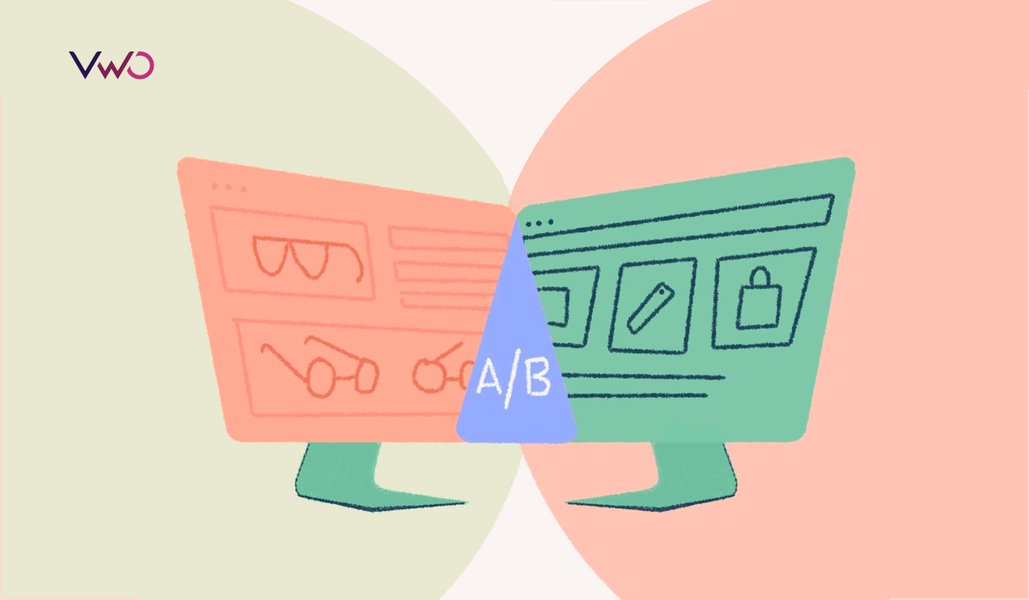

A/B testing, or split testing, is the practice of showing two variants of the same web page to different segments of visitors at the same time and comparing which variant drives more conversions. Typically, the variant with more conversions is the winner, and applying it helps you optimize your site for better results.

You can A/B test an email campaign by sending one version of an email to one portion of your list and a different version to another portion. You then compare the open rate, click-through rate, or another KPI for each version.
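To make the comparison concrete, here is a minimal Python sketch that computes open rate and click-through rate for two variants from raw counts. The `VariantResult` class, the `leader` helper, and the counts are hypothetical. The sketch also assumes that click-through rate means unique clicks divided by delivered emails. Some tools divide by opens instead, which is often called click-to-open rate.

```python
from dataclasses import dataclass


@dataclass
class VariantResult:
    """Hypothetical per-variant counts exported from an email tool."""
    name: str
    delivered: int  # emails that reached recipients
    opens: int      # unique opens
    clicks: int     # unique clicks

    @property
    def open_rate(self) -> float:
        return self.opens / self.delivered if self.delivered else 0.0

    @property
    def click_through_rate(self) -> float:
        # Assumption: CTR = unique clicks / delivered (some tools use clicks / opens)
        return self.clicks / self.delivered if self.delivered else 0.0


def leader(a: VariantResult, b: VariantResult, metric: str = "open_rate") -> str:
    """Name of the variant with the higher value of `metric` (ties go to A, the control)."""
    return a.name if getattr(a, metric) >= getattr(b, metric) else b.name


# Hypothetical results for a 5,000 vs 5,000 send
variant_a = VariantResult("A", delivered=5000, opens=1100, clicks=150)
variant_b = VariantResult("B", delivered=5000, opens=1250, clicks=175)
print(f"A: open {variant_a.open_rate:.1%}, CTR {variant_a.click_through_rate:.1%}")
print(f"B: open {variant_b.open_rate:.1%}, CTR {variant_b.click_through_rate:.1%}")
print("Leader on open rate:", leader(variant_a, variant_b))
```

A higher rate does not prove a winner on its own. With small groups, the gap can be random noise, so check statistical significance before you roll a variant out.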

The elements that you can A/B test are:

1. Email subject line:

A common recommendation is to keep subject lines concise: no more than 9 words and around 60 characters. Use this as a starting point to test whether a brief or a more detailed subject line works better for your audience. You can also experiment with questions versus straightforward statements, then see which of the two subject lines earns the higher open rate.
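As a quick pre-flight check before you set up a length test, the sketch below counts the words and characters in a subject line and compares them with the 9-word / 60-character guideline. The function name and its default thresholds are illustrative assumptions. Treat the result as a prompt for testing, not as a rule.

```python
def check_subject_line(subject: str, max_words: int = 9, max_chars: int = 60) -> dict:
    """Count words and characters and compare them with a length guideline."""
    word_count = len(subject.split())  # whitespace-separated words
    char_count = len(subject)          # includes spaces and punctuation
    return {
        "words": word_count,
        "characters": char_count,
        "within_guideline": word_count <= max_words and char_count <= max_chars,
    }


# Two hypothetical candidates: a question-style line and a short direct offer
print(check_subject_line("Want to boost your business with A/B testing? We can help"))
print(check_subject_line("Get 15% off on your next purchase"))
```

The question-style line fits within 60 characters but runs to 11 words, which makes it a natural candidate for a short-versus-long test.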

For instance, you can try “Want to boost your business with A/B testing? We can help” instead of a direct statement. A/B testing email subject lines with different tones can help determine whether a conversational, curious, or professional approach improves open rates.

You can also leverage psychological principles like urgency, social proof, and FOMO to enhance the appeal of your subject line. Just be sure to avoid misleading your audience and maintain consistency in your messaging throughout the email.

2. Preheader:

Include a preheader (also called preview text), and test variations that you hypothesize could increase the open rate. You can also try personalizing preheaders by adding the recipient’s first name. Here are some ideas for testing your email preheader:

- Incorporate copy from the first line of the email: Example – “Hello (name), you can now explore our latest collection of smart gadgets designed to simplify your life”

- Give a concise summary: Example – “Discover how you can upgrade your life with our smart gadgets”

- Include a CTA: Example – “Unlock the best discount deal! Get 15% off on your next purchase”

- Mention your company name to reinforce brand association: Example – “Unveil the geek in you with Henderson’s gadgets!”

3. Different times and days:

If you cater to a global audience, test sending in different time zones, because an email’s send time affects your open rate.

People are more likely to check their email on weekdays than on weekends, so focus your send-day tests on weekdays. For example, if you always send your newsletter on Tuesdays, try sending it on Wednesdays at the same time to see what happens.
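If your list spans several time zones, one way to test send times is to schedule each email for the same local hour in every recipient’s zone. The sketch below assumes each recipient record carries a fixed UTC offset, which is a hypothetical field. Fixed offsets ignore daylight saving time, so a production version would more likely store IANA zone names and use Python’s `zoneinfo` module.

```python
from collections import defaultdict
from datetime import date, datetime, time, timedelta, timezone


def utc_send_time(send_date: date, local_time: time, utc_offset_hours: float) -> datetime:
    """UTC instant at which the recipient's clock shows `local_time` on `send_date`."""
    recipient_tz = timezone(timedelta(hours=utc_offset_hours))
    local = datetime.combine(send_date, local_time, tzinfo=recipient_tz)
    return local.astimezone(timezone.utc)


def schedule_by_local_time(recipients: dict, send_date: date, local_time: time) -> dict:
    """Group recipient addresses by the UTC time their email should go out."""
    batches = defaultdict(list)
    for address, offset in recipients.items():
        batches[utc_send_time(send_date, local_time, offset)].append(address)
    return dict(batches)


# Hypothetical recipients with fixed UTC offsets (no daylight-saving handling)
recipients = {"ana@example.com": -5, "kenji@example.com": 9, "priya@example.com": 5.5}
schedule = schedule_by_local_time(recipients, date(2024, 3, 13), time(10, 0))
for send_at, addresses in sorted(schedule.items()):
    print(send_at.isoformat(), addresses)
```

You can also run the Tuesday versus Wednesday test with the same function. Change `send_date` and keep `local_time` fixed, so that the day is the only variable that differs.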

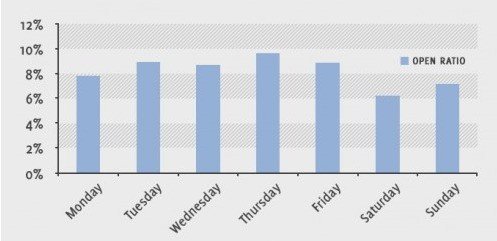

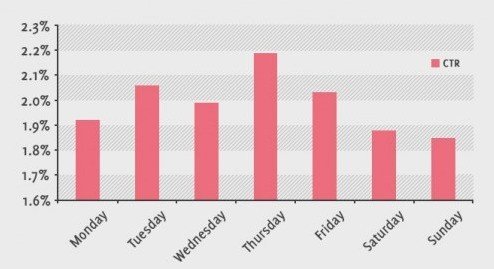

Many studies have examined the best days and times to send emails. Here is GetResponse’s breakdown of open and click-through rates, based on an analysis of 21 million US-based emails.

Data sourced from GetResponse

Also, test how long to wait before emailing users who abandon your site. The timing affects open and click-through rates, which ultimately affect your sales.

4. Visuals:

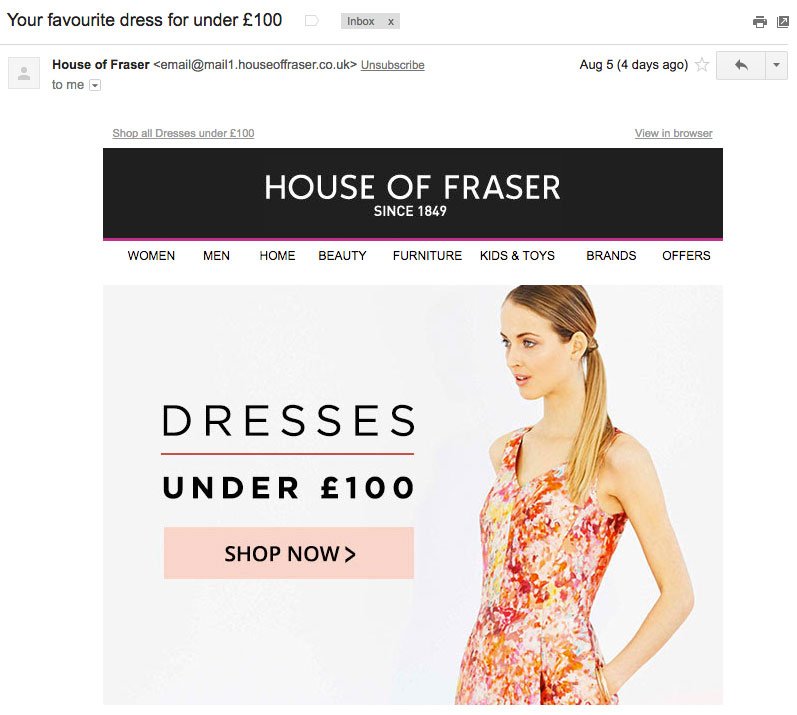

Since our brains respond better to visuals than to text, you can make your email far more engaging with compelling visuals. Below are some visual elements you can test:

Image placement:

Test how the position of the image (top, center, or bottom) affects the recipient’s response and engagement.

Image type:

Determine whether product photos, lifestyle photos, infographics, or illustrations resonate the best with your audiences.

Image size:

Test various image sizes to find the optimal dimensions that encourage clicks and conversions.

Image CTAs:

Compare CTA buttons placed within images against separate text-based CTAs.

5. Personalization:

Few things work as well as tailoring your emails to your prospects’ expectations, and that depends on effective communication. Your audience is more likely to listen when you address them by name and reach them through the right channel, at the right moment, with the right message. They are most likely to engage with your content when they trust you to solve their pain points.

6. Content:

Content is king! Your content includes the copy you write, the images, videos, and GIFs you use, and how you place them in a layout that makes your email engaging.

Below are a few parameters worth testing in your content:

- Length: A/B test the length of your copy to learn whether your audience prefers short-form copy with links or long-form copy that goes deeper.

- Specific or generic: You can’t know in advance what will work best for your target group, so test specific copy against generic copy and see which gets more traction.

- Positive, neutral, or provocative: Test the tone of your copy. Positive copy engages your reader’s brain in a powerful way and can help them understand your message better. Readers are more likely to convert when well-written copy engages and motivates them.

7. CTA and buttons:

The CTA button tells recipients what to do after reading your email. It has to grab their attention and make them want to click. You can test your CTA buttons on copy, color, placement, and format:

- Copy: By testing generic CTAs such as “Buy more” against specific CTAs like “Get your custom denim here,” you can learn which works better for your brand.

- Color: Your CTA button should complement the rest of your email while still standing out. For example, a green CTA button on a white background is very eye-catching.

- Placement: Try different placements, such as inside or outside an image, and left-, right-, or center-aligned.

- Button vs text: When crafting CTAs for your email campaigns, you have two choices: CTA buttons or text hyperlinks. Test both to determine which is more effective for your specific campaign.


Email A/B testing best practices

Ideally, your email marketing platform offers A/B testing functionality. Even if it doesn’t, you can run tests by manually segmenting your list and creating a separate campaign for each segment.

Review the following email A/B testing best practices to improve results from your email campaigns:

Tip #1: Start by formulating a hypothesis

If you change an email, run an A/B test, and find that one variation performs better than another, that’s a start. But if you don’t know which element you are testing, why you are testing it, and what goal you want to achieve, you can’t act on the result.

So, start by formulating a hypothesis. Define what you want to improve and why you think your change will contribute to the desired outcome.

Tip #2: Test high-impact and low-effort elements

While A/B testing, don’t get overwhelmed and start testing every element. Prioritize elements that you expect to have a high impact on your email performance and that you can change quickly, such as your CTAs, headlines, and visual hierarchy.

Later on, you can shift to testing the timing of your email automation flows, the actions you use as triggers, or the way you segment your recipients, and gauge their impact on open rates.

Tip #3: Focus on frequently sent emails first

Focus on the emails you send frequently, such as your weekly newsletter. Pay close attention to the templates you choose, the headlines you write, the type of content you include, the placement of CTAs, and so on.

When you test these emails, you can quickly apply the insights to your next send. With occasional emails, such as event invitations, you may have to wait until the next occurrence of the event before you can implement what you learned, and that could be a year or longer.

By refining your frequent emails, you can improve conversion rates from your email campaigns.

Tip #4: Test broadcast, segmented, automated, and transactional messages

Test your broadcast and segmented messages. Then extend both the practice of A/B testing and the lessons you’ve learned to the other types of emails you send, including automated and transactional messages.

Tip #5: Choose the right sample size

For email lists of more than 1,000 subscribers, you can apply an 80/20 split when choosing the sample size: use 20% of the list for the test, and send the result to the remaining 80%.

In A/B testing, this means sending one variant to 10% of the recipients and the other variant to another 10%. The remaining 80% receive the better-performing variant based on the initial results. Each 10% group still needs to be large enough for the difference between the variants to be statistically significant. Otherwise, the results won’t be accurate.
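If your email tool does not handle the split for you, you can set up the 10% / 10% / 80% split by hand. The Python sketch below shuffles a subscriber list with a seeded random generator, then carves out two equal test groups plus a remainder. The function name `split_for_test` and its `test_percent` parameter are hypothetical. The sketch does not check whether the groups are large enough for statistical significance.

```python
import random


def split_for_test(recipients, test_percent=10, seed=None):
    """Randomly split recipients into variant A, variant B, and the remainder.

    With test_percent=10 this gives a 10% / 10% / 80% split.
    """
    if not 0 < test_percent <= 50:
        raise ValueError("test_percent must be between 1 and 50")
    pool = list(recipients)
    random.Random(seed).shuffle(pool)  # random assignment avoids biased groups
    n_test = len(pool) * test_percent // 100  # integer math, no float rounding
    group_a = pool[:n_test]
    group_b = pool[n_test:2 * n_test]
    remainder = pool[2 * n_test:]  # later receives the winning variant
    return group_a, group_b, remainder


# Hypothetical list of 5,000 subscribers
subscribers = [f"user{i}@example.com" for i in range(5000)]
a, b, rest = split_for_test(subscribers, seed=42)
print(len(a), len(b), len(rest))
```

The split is random rather than alphabetical or by sign-up date, so that neither group is systematically different from the other. A fixed `seed` makes the split reproducible if you need to rebuild the groups later.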

Tip #6: Consider the potential impact of timing on email performance

No matter how excited you are to kick off a new email A/B testing program, be cautious if that means starting around a period of irregular seasonal or industry-specific activity. Reaching incorrect conclusions from abnormal spikes of activity won’t do your future testing any good.

Tip #7: Test one variable at a time and keep a log of your test

It is wise to change one element at a time so that you can pinpoint exactly which change produced the impact you are measuring.

When you test one variable in the same email at a time, you can confidently attribute the uplift in the metric to the specific change in that variable. For example, if you changed both product image and product discount in an email, it would become difficult to attribute the entire difference to just one element.

However, if you want to test more than one variable at once, multivariate testing is an option. Choose email marketing software that supports various types of tests, segmentation, and integrations, and that is easy to use.

Also, do not forget to record your findings and learnings from every variable you tested, so that you can go back to analyze them and modify your campaigns.


Conclusion

From formulating hypotheses to writing engaging email copy that earns the open rate you want, it is vital to A/B test every change before you deploy it in your email marketing campaigns. Also remember that a higher conversion rate usually comes from a combination of marketing channels, such as retargeting via push notifications alongside email. Distributing resources thoughtfully between these two channels can improve overall conversions and your bottom line.

Frequently Asked Questions

What is email A/B testing?

Email A/B testing, or split testing, compares two or more versions of an email to determine which performs better against goals like open rates and click-through rates. A single element, such as the subject line, content, images, or CTA, is changed so that any difference in the metrics can be attributed to that element. By analyzing these metrics, marketers can refine their email campaigns and make data-driven decisions.

How do you run an email A/B test?

To run an A/B test on an email campaign, follow these steps:

Set your goal: Clearly state the goals of your test, such as improving open rates, click-through rates, and so on.

Segment your audiences: Divide your email list into groups, and decide which group receives the control and which receives the variant.

Create variations: Design a test version that changes a single element. For example, the CTA button stays unchanged in the control, while its copy is changed in the variant.

Send the emails: Use an email A/B testing tool to send each version to its group.

Analyze results: Assess key metrics and compare the performance of A and B. Identify the winning version based on your defined objectives.

Implement changes: Apply the winning version’s improvements to future email campaigns.
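For the “Analyze results” step, a common way to check whether a difference in open or click rates is more than chance is a two-proportion z-test. The sketch below is a minimal implementation that uses only the standard library and relies on the usual normal approximation. That approximation is reasonable when each group has plenty of both opens and non-opens. The counts are hypothetical, and many A/B testing tools run an equivalent or more sophisticated test for you.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for the difference between two rates (e.g. open rates).

    Returns (z, p_value). A small p_value (commonly < 0.05) suggests the
    difference between A and B is unlikely to be random noise.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)  # rate if A and B were identical
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both groups are at 0% or 100%; the test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) == erfc(|z| / sqrt(2))
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical open counts: A opened 1,100 of 5,000; B opened 1,250 of 5,000
z, p = two_proportion_z_test(1100, 5000, 1250, 5000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Now keep the same 22% and 25% open rates but use only 500 recipients per group. The p-value rises well above 0.05, which shows why sample size matters as much as the size of the gap.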

What sample size should you use for email A/B testing?

The sample size for email A/B testing depends on the size of your email list and the desired level of statistical confidence. Generally, a sample size of at least a few thousand recipients, split evenly between the control and variation groups, is suggested.

However, with larger lists, a smaller percentage of the list is usually enough for testing. Online calculators and email A/B testing tools can help determine the sample size needed to detect meaningful differences in your email metrics with confidence.
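Online sample size calculators typically apply a formula like the one sketched below. This is the standard normal-approximation formula for comparing two proportions with a two-sided test. Individual calculators may use slightly different formulas and give slightly different numbers. The function name, default significance level (5%), default power (80%), and example rates are all assumptions for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Recipients needed in EACH group to detect a change from baseline_rate
    to expected_rate with a two-sided two-proportion test (normal approximation)."""
    if baseline_rate == expected_rate:
        raise ValueError("rates must differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(
            baseline_rate * (1 - baseline_rate) + expected_rate * (1 - expected_rate)
        )
    ) ** 2
    return math.ceil(numerator / (baseline_rate - expected_rate) ** 2)


# Hypothetical: current open rate 20%, hoping a new subject line lifts it to 22%
n = sample_size_per_group(0.20, 0.22)
print(n, "recipients per group")
```

Small lifts need large groups. Detecting a 20% to 22% change takes roughly 6,500 recipients per group, so a 10% / 10% test split only reaches that size on a list of about 65,000 subscribers. Larger expected lifts need far fewer recipients.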

What are the benefits of A/B testing emails?

A/B testing emails can increase open and click-through rates by optimizing subject lines, images, content, CTA buttons, and other important elements. Higher click-through rates can boost conversions and revenue, which you can measure by tracking email-to-sales conversions.

Understanding your target audience’s expectations leads to personalized content and customized solutions. A/B testing also saves time and money: you refine campaigns through incremental changes that deliver meaningful results and contribute to a company’s bottom line.