How AMD Used VWO To Increase Social Shares

About Advanced Micro Devices (AMD)

Advanced Micro Devices (AMD) is one of the world’s leading semiconductor companies, developing computer processors and related technologies for business and consumer markets.

Goals: Increase Social Sharing of News Published on AMD.com through a ShareThis Icon/Link

AMD regularly publishes news and other updates about its offerings on AMD.com, and it wanted visitors to share this content on social media to extend its reach.

To make sharing easier, AMD had embedded a ShareThis link/icon in the pages of the site. Based on site metrics and on how content was being shared across social platforms, AMD’s Online Marketing team believed that more could be done, and it selected VWO to help increase the number of shares.

AMD’s Online Marketing team wondered whether the appearance and existing placement of the ShareThis icon/link, which sat in the footer of every AMD.com page, were optimal for users. The team felt that making the icon/link more visible would lead to wider use.

AMD’s Online Marketing team decided to use A/B testing to understand user behaviour and interactions with these icons, and thus to determine the best combination of icon position and appearance.

The testing was implemented and controlled by VWO.

Tests run: A/B Testing Helped Determine Icon Appearance and Optimal Placement

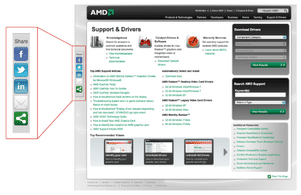

Working with VWO tools, the AMD Online Marketing team created and tested 6 variations (including the control version) with different icon styles and placements. The combinations tested various positions (left, right, bottom) and appearances (icon/link, large chicklets, small chicklets) on one of the most visited and most shared sections of the website, Support & Drivers (http://support.amd.com).

All 6 variations were deployed across the entire support.amd.com subdomain and ran for 5 days. Traffic was ramped up in stages: the test was served to a smaller portion of visitors for the first two days, increased to 35% for the next two days, and increased to 100% on the last day. The last day also coincided with the launch of a new software driver, which typically generates more traffic.

Once a visitor saw a particular variation, they kept seeing that same variation, ensuring a consistent user experience. Based on the results, the AMD Online Marketing team recommended the left-position chicklet version, adjusted dynamically to the browser window size.
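The case study does not describe how VWO assigns visitors internally, but the staged traffic ramp and the "same variation every time" behaviour can be illustrated with a common technique: hashing a visitor ID to decide, deterministically, whether the visitor is in the test and which of the 6 variations they see. The sketch below is a hypothetical Python illustration; the function names, the salts, and the use of SHA-256 are assumptions, not VWO's API or implementation.

```python
import hashlib
from typing import Optional

# Hypothetical: control plus five challengers, matching the 6 variations tested.
NUM_VARIATIONS = 6


def _bucket(visitor_id: str, salt: str, buckets: int) -> int:
    """Map a visitor ID to a stable integer in [0, buckets) using SHA-256."""
    digest = hashlib.sha256(f"{salt}:{visitor_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def assign_variation(visitor_id: str, traffic_percent: int) -> Optional[int]:
    """Return a variation index (0..5) for visitors in the test, else None.

    Inclusion and variation use independent salts, so raising traffic_percent
    (e.g. from 35 to 100) only adds new visitors: anyone already in the test
    keeps exactly the same variation.
    """
    if _bucket(visitor_id, "inclusion", 100) >= traffic_percent:
        return None  # outside the test: the visitor sees the unmodified site
    return _bucket(visitor_id, "variation", NUM_VARIATIONS)


if __name__ == "__main__":
    for pct in (35, 100):
        print(pct, assign_variation("visitor-123", pct))
```

For any visitor ID, `assign_variation(vid, 35)` returns either `None` or the same index that `assign_variation(vid, 100)` returns. Keeping the variation hash independent of the traffic percentage is the design choice that makes a staged ramp compatible with a consistent user experience.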

Conclusion: 3,600% Increase in Social Sharing with No Adverse Impact on Overall Engagement

The test findings proved to be statistically significant, demonstrating up to a 36x increase in social sharing on the tested site compared to the original site configuration. The A/B tests also helped the team objectively conclude that the bottom chicklet and link variations were less productive. Equally important, the tests established that the variations had no adverse impact on overall engagement on the pages (average engagement conversion was over 23%).
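The case study does not say which statistical method established significance, and VWO's own reporting may use a different approach. Purely as an illustration, a classic two-proportion z-test is one standard way to check whether a variation's share rate differs from the control's. The function name and inputs below are hypothetical, and no AMD data is used.

```python
import math
from typing import Tuple


def two_proportion_z_test(shares_a: int, visits_a: int,
                          shares_b: int, visits_b: int) -> Tuple[float, float]:
    """Two-sided z-test for a difference in share rates (variation B vs. control A).

    Returns (z, p_value). A positive z means B's share rate is higher than A's.
    """
    if visits_a <= 0 or visits_b <= 0:
        raise ValueError("each group needs at least one visit")
    rate_a = shares_a / visits_a
    rate_b = shares_b / visits_b
    # Pooled rate under the null hypothesis that both groups share one rate.
    pooled = (shares_a + shares_b) / (visits_a + visits_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visits_a + 1 / visits_b))
    if std_err == 0:
        raise ValueError("no variance: every visit shared, or none did")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

With made-up counts, `two_proportion_z_test(10, 10_000, 100, 10_000)` yields a large positive z and a p-value far below 0.05. The z-test assumes reasonably large samples. When five challengers are each compared against one control, a stricter threshold (for example, a Bonferroni correction) is usually applied.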

The final result of the testing was in line with expectations, but the wide spread of results across the variations was unexpected. This provided strong support for continuing to use A/B testing for future optimization. VWO makes A/B testing easy. Take a free trial to explore the features in detail and decide for yourself.

AMD’s Online Marketing team had this to say about VWO:

“VWO has proven to be a great asset to the AMD Online Marketing team by providing a scalable and user-friendly solution that helps to clearly demonstrate user interaction and behavior. The ability to run optimization tests on a regular basis with minimal effort is critical to AMD’s global online presence and in providing an optimal user experience for AMD.com visitors”.

Location

Santa Clara, US

Industry

Manufacturing

Impact

3,600% increase in social shares